Startup RapidFire AI Inc. today released an open-source software package aimed at simplifying the development of retrieval-augmented generation, or RAG, pipelines, which are increasingly central to enterprise artificial intelligence applications.

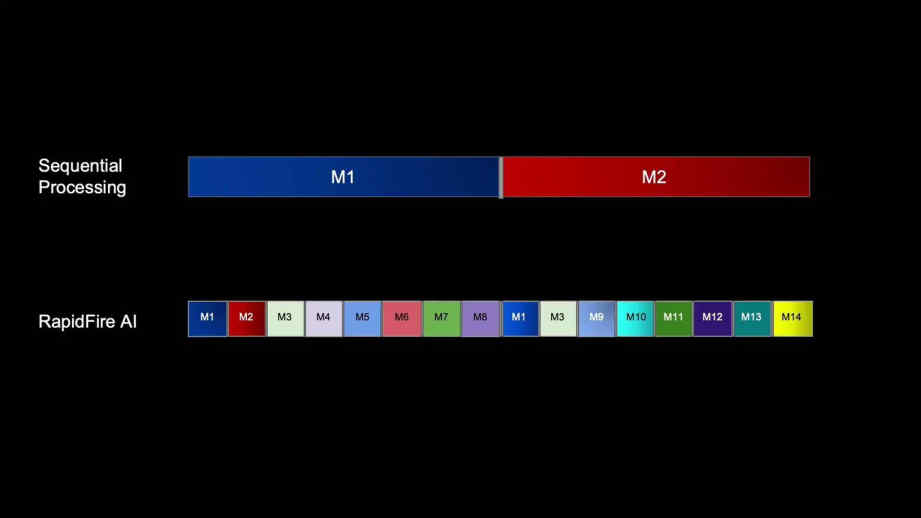

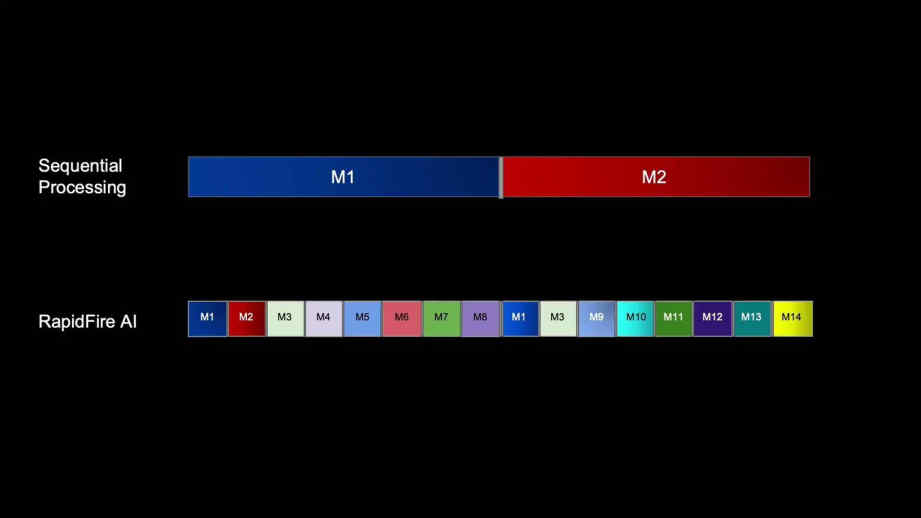

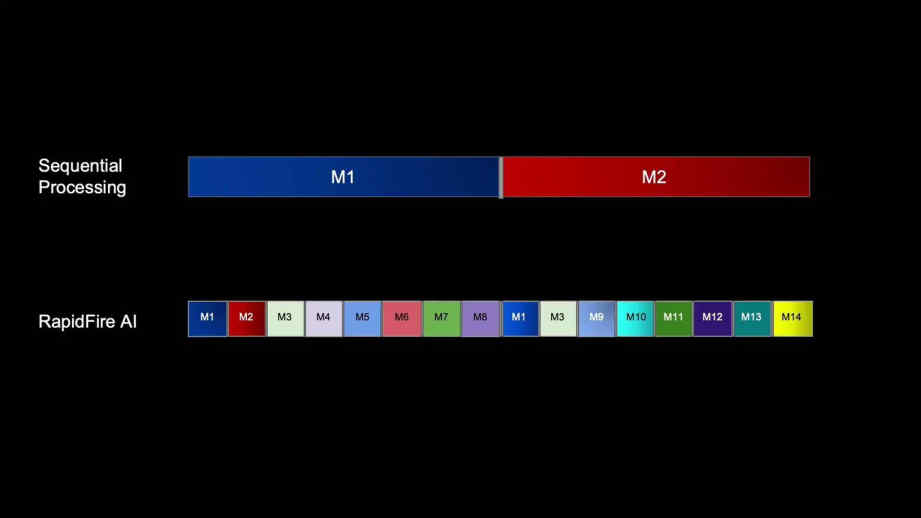

The package, RapidFire AI RAG, extends the company’s “hyperparallel experimentation framework” to allow developers to simultaneously test and evaluate different configurations of chunking, which divides large documents into smaller pieces, as well as retrieval techniques and prompting schemes. Those processes are typically conducted sequentially, but RapidFire’s technology allows many streams to run in parallel.
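To make the idea of "configurations" concrete, here is a minimal sketch, assuming a toy search space, of how chunking, retrieval and prompting choices multiply into a grid of candidate setups. The names `RagConfig`, `chunk_text` and `build_grid`, along with the knob values and prompt names, are hypothetical illustrations, not part of RapidFire AI RAG's API; the chunker is a simple fixed-size, character-based splitter.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class RagConfig:
    """Hypothetical record for one point in the RAG search space."""
    chunk_size: int     # characters per chunk
    chunk_overlap: int  # characters shared by consecutive chunks
    top_k: int          # chunks retrieved per query
    prompt: str         # name of a prompt template (illustrative)


def chunk_text(text: str, size: int, overlap: int) -> list[str]:
    """Split text into fixed-size character windows that overlap by `overlap`."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    step = size - overlap
    # A new window starts only if it adds characters beyond the previous
    # window's overlap; max(..., 1) keeps at least one window for short text.
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


def build_grid() -> list[RagConfig]:
    """Cartesian product of a few illustrative values for each knob."""
    return [
        RagConfig(size, overlap, k, prompt)
        for size, overlap, k, prompt in product(
            [256, 512], [0, 64], [3, 5], ["concise", "cite_sources"]
        )
    ]


grid = build_grid()
print(len(grid))  # 16 configurations from 2 x 2 x 2 x 2 choices
print(chunk_text("abcdefghij", 4, 1))  # ['abcd', 'defg', 'ghij']
```

Even two values per knob yields 16 setups; run one after another, each full evaluation waits on the previous one, which is the sequential bottleneck the package targets.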

As generative AI applications expand, developers are seeking more robust ways to evaluate and customize performance, said co-founder and chief technology officer Arun Kumar, who developed the parallelization software while teaching at the University of California at San Diego. Evaluation metrics, often domain-specific and sometimes automated using smaller language models, have matured significantly in the last year.

However, practice hasn’t necessarily kept pace with the technology. “In enterprise AI, the hard part isn’t building the pipeline; it’s knowing which combination of retrieval, chunking and prompts delivers trustworthy answers,” Madison May, chief technology officer of Indico Data Solutions Inc., said in a statement.

RapidFire AI RAG supports real-time control, monitoring and automatic optimization of multiple RAG experiments, even when run on a single machine. The system dynamically distributes compute resources or token usage limits across configurations, depending on whether the user is working with self-hosted models or closed model application programming interfaces.

Organizations often underestimate the complexity of RAG workflows, said Jack Norris, co-founder and chief executive officer of RapidFire AI.

“They are not going to drive differentiation from the models, which are basically commodities,” he said. “It’s how they best leverage their data.”

Kumar said many teams fail to account for the multiple interacting variables that determine model performance. “People just brush under the carpet that there are these gazillion knobs in RAG,” he said. “How do you chunk the data? How do you embed it? How do you retrieve it? How do you re-rank it? Every one of those interacts in nontrivial ways and can affect your evaluation metrics.”

Kumar said that, by some estimates, 90% of RAG prototypes fail to reach production due to lapses in testing such variables.

The company’s approach centers on what it terms “hyper parallelization” (pictured), a method for swapping configurations in and out of limited hardware resources, such as graphics processing units, using shared memory techniques. This allows multiple experiments to run in parallel, delivering results faster.

“We swap configs in and out of GPUs automatically in a very efficient manner,” Kumar said. “This basically allows you to get a sample of all the configurations across shards of data.”
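The shard-by-shard swapping Kumar describes can be illustrated with a toy round-robin scheduler. This is a minimal sketch under stated assumptions, not RapidFire AI's implementation: the data is pre-split into shards, a fixed number of "slots" stands in for GPUs, and shared memory and model transfers are omitted. The function name `shard_schedule` and its parameters are hypothetical.

```python
from collections import deque


def shard_schedule(configs: list[str], num_shards: int, num_slots: int) -> list[list[tuple[str, int]]]:
    """Round-robin schedule: each round, up to `num_slots` configs run on their next shard.

    Returns one list per round of (config, shard) pairs. A config that still has
    shards left goes to the back of the queue, i.e. it is "swapped out" so other
    configs get a turn on the limited slots.
    """
    if num_shards < 1 or num_slots < 1:
        raise ValueError("num_shards and num_slots must be at least 1")
    next_shard = {c: 0 for c in configs}
    queue = deque(configs)
    rounds = []
    while queue:
        batch = [queue.popleft() for _ in range(min(num_slots, len(queue)))]
        rounds.append([(c, next_shard[c]) for c in batch])
        for c in batch:
            next_shard[c] += 1
            if next_shard[c] < num_shards:  # more shards to process later
                queue.append(c)
    return rounds


for n, batch in enumerate(shard_schedule(["A", "B", "C"], num_shards=2, num_slots=2), start=1):
    print(f"round {n}: {batch}")
# round 1: [('A', 0), ('B', 0)]
# round 2: [('C', 0), ('A', 1)]
# round 3: [('B', 1), ('C', 1)]
```

With only two slots, every configuration has a result on shard 0 after two rounds, whereas a sequential run would finish all of config A before config B even starts.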

The system also supports dynamic experiment control, which allows users to stop, clone or modify experiments mid-run. A forthcoming update will add AutoML support for automated optimization of cost or performance.
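The stop, clone and modify operations described above can be pictured with a small in-memory controller. This is a hypothetical sketch, not RapidFire AI RAG's interface: `Run`, `ExperimentController`, `launch`, `stop`, `clone_modify` and `step` are illustrative names, and "progress" is simply a count of shards processed. As an assumed design choice, "modify" is modeled as cloning with changed knobs, so metrics already collected stay attributable to the original configuration.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """A hypothetical running experiment: a config plus its progress."""
    run_id: int
    config: dict
    shards_done: int = 0
    stopped: bool = False


class ExperimentController:
    """Toy controller that stops or clone-modifies runs between shards."""

    def __init__(self) -> None:
        self.runs: dict[int, Run] = {}
        self._next_id = 0

    def launch(self, config: dict) -> int:
        run = Run(self._next_id, dict(config))  # copy so callers can't mutate it
        self.runs[run.run_id] = run
        self._next_id += 1
        return run.run_id

    def stop(self, run_id: int) -> None:
        self.runs[run_id].stopped = True

    def clone_modify(self, run_id: int, **changes) -> int:
        """Start a new run from an existing run's config with some knobs changed."""
        return self.launch({**self.runs[run_id].config, **changes})

    def step(self) -> None:
        """Advance every active run by one shard."""
        for run in self.runs.values():
            if not run.stopped:
                run.shards_done += 1


ctrl = ExperimentController()
base = ctrl.launch({"chunk_size": 512, "top_k": 3})
ctrl.step()                                 # base run evaluates one shard
variant = ctrl.clone_modify(base, top_k=5)  # new run with one knob changed
ctrl.stop(base)                             # halt the original mid-run
ctrl.step()
print(ctrl.runs[base].shards_done, ctrl.runs[variant].shards_done)  # 1 1
print(ctrl.runs[variant].config)  # {'chunk_size': 512, 'top_k': 5}
```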

RapidFire AI RAG integrates with the LangChain framework for agentic workflows. It supports large language models from OpenAI LLC, Anthropic PBC and Hugging Face Inc., as well as self-hosted re-rankers and a variety of search backends. It supports both document preprocessing and query processing and can execute experiments more efficiently by collapsing redundant operations across configurations, a concept known in database engineering as multi-query optimization.
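In its simplest form, collapsing redundant operations means computing a shared step once and reusing it. The sketch below assumes that configurations differing only in downstream knobs (`top_k`, `prompt`) can share one chunked copy of the corpus; the function `plan_shared_chunking`, the dictionary keys and the toy character chunker are hypothetical, and the real package may share work at other stages too.

```python
from itertools import product


def chunk_text(text: str, size: int, overlap: int) -> list[str]:
    """Fixed-size character chunks with overlap (toy chunker)."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    step = size - overlap
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


def plan_shared_chunking(configs: list[dict], text: str) -> tuple[dict[int, list[str]], int]:
    """Run chunking once per distinct (chunk_size, chunk_overlap) pair.

    Returns the chunks assigned to each config index and the number of
    chunking passes actually executed.
    """
    cache: dict[tuple[int, int], list[str]] = {}
    chunks_for: dict[int, list[str]] = {}
    for idx, cfg in enumerate(configs):
        key = (cfg["chunk_size"], cfg["chunk_overlap"])
        if key not in cache:  # first config with this chunking setup does the work
            cache[key] = chunk_text(text, *key)
        chunks_for[idx] = cache[key]  # later configs reuse the cached chunks
    return chunks_for, len(cache)


configs = [
    {"chunk_size": s, "chunk_overlap": o, "top_k": k, "prompt": p}
    for s, o, k, p in product([256, 512], [0, 64], [3, 5], ["concise", "cite_sources"])
]
chunks_for, passes = plan_shared_chunking(configs, "some document text " * 100)
print(len(configs), passes)  # 16 4
```

Here 16 configurations need only four chunking passes, because each distinct chunking setup is shared by four configurations that vary only retrieval depth and prompt.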

The tool has been downloaded more than 1,000 times since a soft launch one month ago, and some design partners are already testing it internally, Norris said. It’s available now via `pip install rapidfireai-rag`.

The company plans to monetize the tool through a premium commercial tier and software-as-a-service offerings in the future.

“Our focus right now is on open source and getting it out there and working with organizations like Hugging Face,” Norris said.

RapidFire AI has raised $4 million in preseed funding from 406 Ventures LLC, AI Ventures Management LLC, Osage University Partners Management Co. LLC and Willowtree Investments.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.